Optimal transport (OT) distances are increasingly used as loss functions for statistical inference, notably in the learning of generative models or supervised learning. For OT ideas to continue to bear fruit in ML, it will be necessary to tackle longstanding challenges, from both statistical and computational points of view: the computation is notoriously expensive, and the plug-in estimation suffers from the curse of dimensionality. Therefore, the aforementioned applications should, in principle, somewhat fail in high-dimensional setting despite being theoretically motivated. Although assuming smoothness of the underlying densities may help, the computational complexities of these estimators degrade exponentially with the dimension. In practice, these issues do play a role since they entail a lack of robustness and instability with respect to inputs.

Update

Researchers

- Tianyi Lin, UC Berkeley, https://tydlin.github.io/

- Michael I. Jordan, UC Berkeley, https://people.eecs.berkeley.edu/~jordan/

- Marco Cuturi, CREST-ENSAE and Google Brain, Paris, https://marcocuturi.net/

Overview

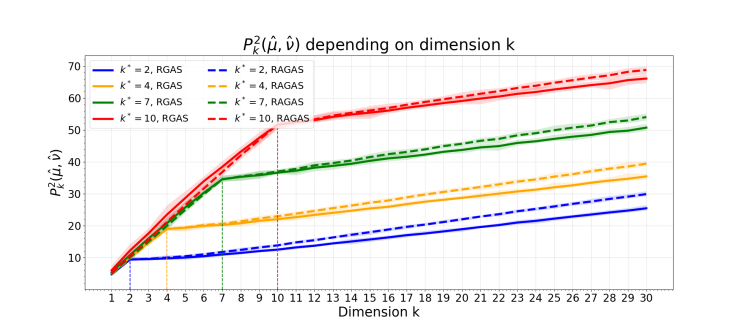

Paty and Cuturi in 2019 proposed to seek the k-dimensional subspace (k > 1) that maximizes the OT distance between two measures after projection, resulting in a projec- tion robust Wasserstein (PRW) distance, providing a generalization of the popular sliced Wasserstein distance. During the past year, we have focused on statistical and computational properties of the plug-in estimation of PRW and derived a bunch of results, including dimension-free sample complexity, consistency and central-limit theorem under model misspecification, nonconvex max-min computational model and provably efficient algorithms based on Riemannian optimization (NeurIPS’20 + AISTATS’21 papers). We will extend these works by studying their differentiability, in order for them to fit naturally in the OTT toolbox

Some very recent works have proposed an alternative approach: their dimension-free computational upper bound was established using an interior-point method (IPM) to solve a large-scale conic problem. We find great opportunity in replacing IPMs with new algorithms, able to exploit the problem structure and bypass the computation, storage, and factorization of a large-scale Schur complement matrix. Our plan includes: (1) Find the dimension-free statistical estimators for multimarginal OT (MOT) (and as an application, Wasserstein barycenter problem (WBP)) (MOT has been recognized as the backbone of numerous applications). (2) Develop efficient algorithms for new estimators for OT, MOT and WBP and demonstrate their practical implications in ML.

Potential for Collaboration

These projects build on several previous projects on algorithmic OT in collaboration with Google researcher M. Cuturi, developing provably efficient algorithms for OT, MOT and WBP and providing novel computational hardness results for fixed-support WBP (3 published, 1 submitted works together with T. Lin and M.I. Jordan). An important expected outcome will be to improve the OTT toolbox with new algorithms.

Links

- Contact email: darren_lin@eecs.berkeley.edu

- Projection Robust Wasserstein Distance and Riemannian Optimization, NeurIPS’20 (Spotlight), https://arxiv.org/abs/2006.07458

- On Projection Robust Optimal Transport: Sample Complexity and Model Misspecification, AISTATS’20, https://arxiv.org/abs/2006.12301