Abstract

Automated optimization has become important in many domains, including hyper-parameter tuning in machine learning and process tuning in manufacturing. In practice, one frequently has to solve similar optimization problems for a specific customized setting, e.g. manufacturing robots optimized for a new customer environment, or hyper-parameter optimization for a new classification task. In these situations, running real-time experiments for optimization is often expensive, so it is preferable to leverage prior knowledge from previously collected data on similar optimization problems. In this project we aim to develop a sample-efficient Bayesian Optimization (BO) framework that can re-use knowledge from multiple data sources.

Overview

We assume we have several datasets D1, ..., Dk drawn from corresponding functions f1, ..., fk, and we want to use these datasets to accelerate BO on a new function. We consider both controlled synthetic environments and real-world optimization problems that can benefit from the proposed setup.

In the synthetic case, we prepare a parameterized function f(x, t) and obtain k functions f1, ..., fk by sampling k values of the control parameter t. We create the auxiliary datasets by drawing N random samples from each of these functions. We then measure how efficiently we can optimize an unseen function. One of the real-world scenarios is using previously solved hyper-parameter optimization problems for a specific range of classification tasks to solve a new one. Our proposal is to employ pre-trained, warm-started models (neural networks / Neural Processes) and combine them with cold-started models (GPs) through novel acquisition functions, improving the quality of the information obtained from evaluating the next candidate in a Bayesian Optimization setup.
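
The synthetic data-generation step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the quadratic family `f`, the sampling ranges for the control parameter `t`, the observation noise, and the names `sample_control` and `make_auxiliary_datasets` are all hypothetical and are not taken from the project itself.

```python
import random


def f(x, t):
    """Hypothetical parameterized family f(x, t): a shifted, scaled quadratic."""
    shift, scale = t
    return scale * (x - shift) ** 2


def sample_control(rng):
    """Draw one control parameter t = (shift, scale); the ranges are assumptions."""
    return (rng.uniform(-1.0, 1.0), rng.uniform(0.5, 2.0))


def make_auxiliary_datasets(k, n, rng, noise_std=0.01):
    """Create k datasets, each holding n random samples from one function f_i."""
    datasets = []
    for _ in range(k):
        t = sample_control(rng)  # fixes one function f_i(x) = f(x, t)
        xs = [rng.uniform(-2.0, 2.0) for _ in range(n)]
        # Gaussian observation noise is an assumption of this sketch.
        ys = [f(x, t) + rng.gauss(0.0, noise_std) for x in xs]
        datasets.append({"t": t, "x": xs, "y": ys})
    return datasets


rng = random.Random(0)
datasets = make_auxiliary_datasets(k=5, n=20, rng=rng)

# The unseen target function uses a fresh control parameter that is
# not associated with any of the auxiliary datasets.
t_new = sample_control(rng)


def target(x):
    return f(x, t_new)
```

In a full experiment, a BO loop would query `target` one point at a time, and sample efficiency would be compared with and without access to `datasets`.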

Researchers

Kourosh Hakhamaneshi, Berkeley

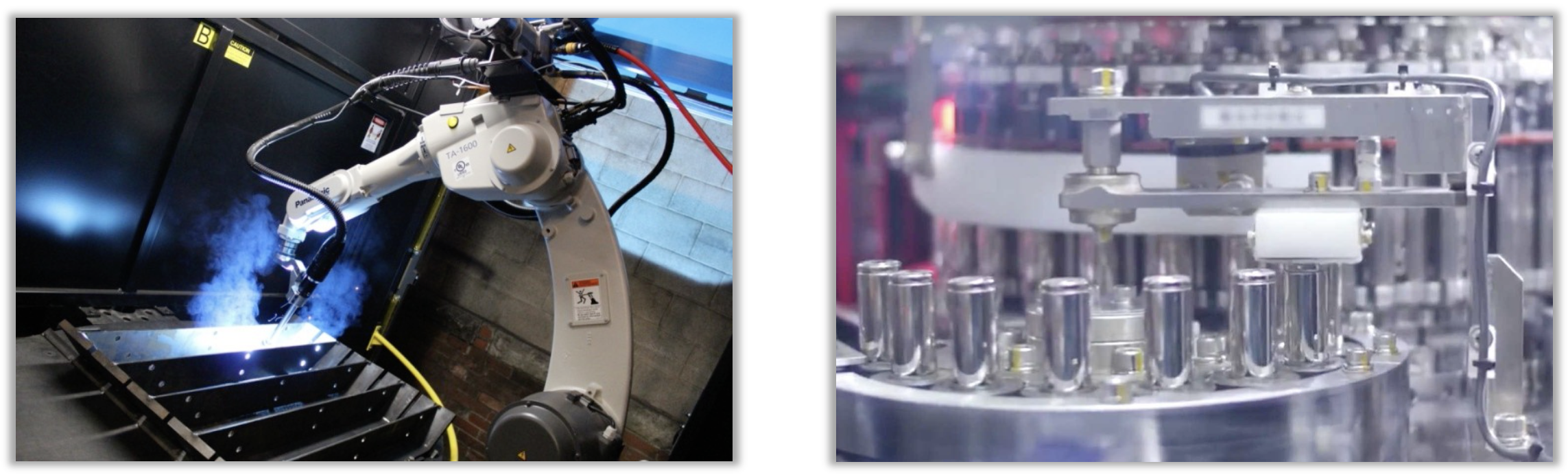

Iku Ohama, Panasonic

Luca Rigazio, Panasonic

Vladimir Stojanovic, Berkeley

Pieter Abbeel, Berkeley