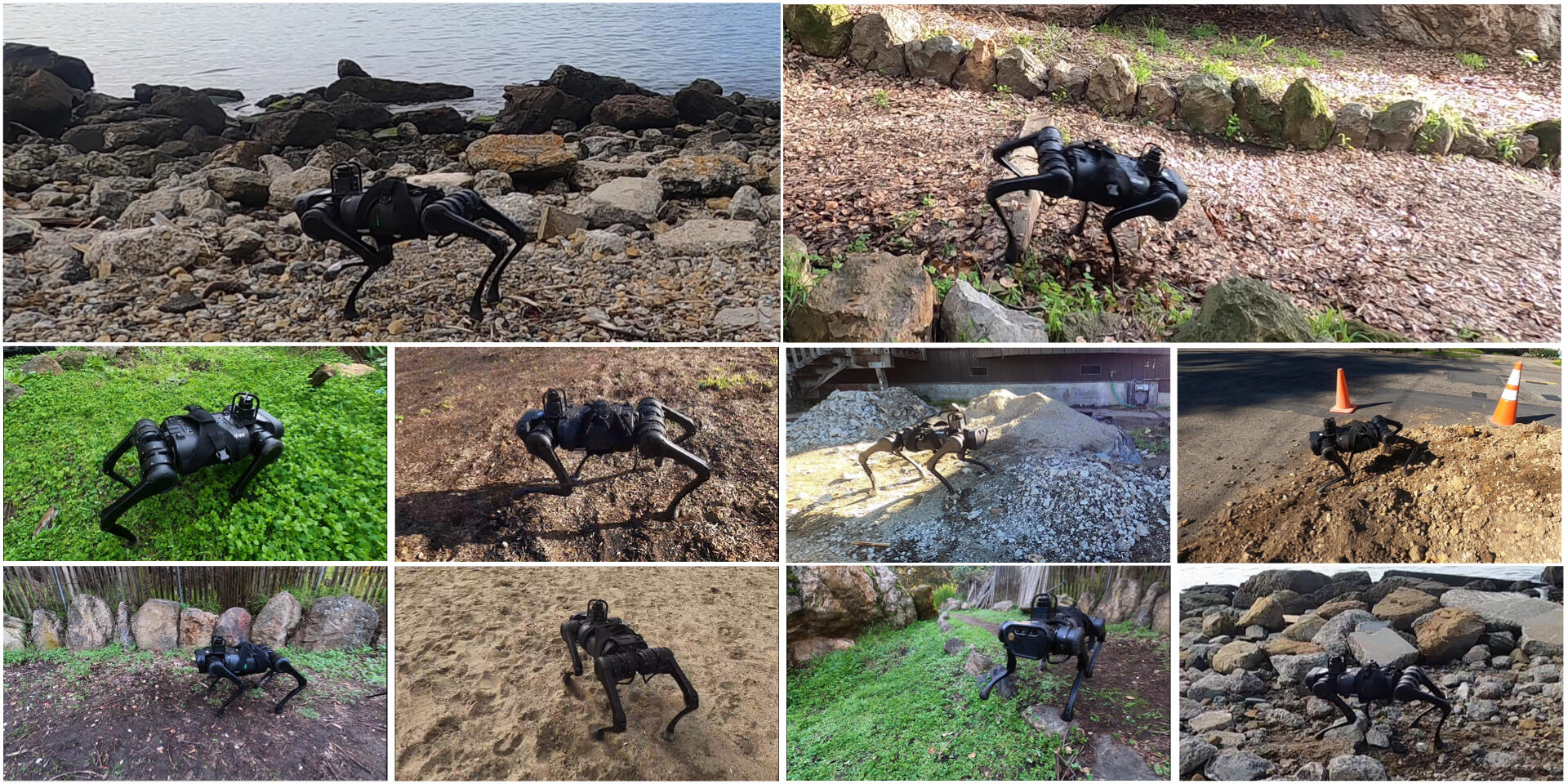

Figure Description: We demonstrate the performance of our new algorithm, RMA, in several challenging environments. The robot walks on sand, mud, hiking trails, tall grass, and a dirt pile without a single failure across all our trials. It succeeds in 70% of the trials when walking down stairs along a hiking trail, and in 80% of the trials when walking across a cement pile and a pile of pebbles. The robot achieves this high success rate despite never having seen unstable or sinking ground, obstructive vegetation, or stairs during training. All deployment results use the same policy, without any simulation calibration or real-world fine-tuning.

While locomotion has been an active area of robotics research for several decades, it has primarily been studied as a function of proprioceptive (i.e., knowledge of self) signals. Sensory information that captures the state of the world, such as vision or depth perception, is treated as an adjunct to the agent's locomotion ability and is incorporated later, at the planning stage, on top of an assumed-perfect locomotion layer. While proprioception can be enough to learn locomotion gaits on flat terrain, the lack of perception makes it extremely hard to adapt the learned gaits, or combinations of them, to uneven terrain without re-planning at every footstep. This is also in contrast to how human children approach locomotion: for them, vision is intrinsically tied to the form of locomotion (e.g., crawling, walking, running) and plays a key role in learning flexible locomotion behavior.

Final Update:

We have developed a new algorithm, Rapid Motor Adaptation (RMA), to solve this problem of real-time online adaptation in quadruped robots. RMA consists of two components: a base policy and an adaptation module. Together, these components enable the robot to adapt to novel situations in fractions of a second. RMA is trained entirely in simulation, without any domain knowledge such as reference trajectories or predefined foot trajectory generators, and it is deployed on the A1 robot without any fine-tuning. We train RMA on a varied terrain generator using bioenergetics-inspired rewards and deploy it on a variety of difficult terrains, including rocky, slippery, and deformable surfaces in environments with grass, long vegetation, concrete, pebbles, stairs, and sand. RMA shows state-of-the-art performance across diverse real-world and simulation experiments.
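As a rough illustration of how the two components interact at run time, here is a deliberately tiny toy problem. It is not RMA itself: in RMA both components are neural networks trained in simulation, and the adaptation module estimates environment information from the robot's recent history of states and actions. In this sketch they are replaced by hypothetical stand-ins: a scalar hidden gain `true_gain` plays the role of the unknown environment (e.g., how much the ground yields), `adaptation_module` recovers it by least squares over a sliding window of transitions, and `base_policy` is a proportional controller conditioned on that estimate.

```python
"""Toy sketch (hypothetical, not the RMA networks) of a base policy plus an
adaptation module that re-estimates a hidden environment factor online.

Toy dynamics: x_next = x + true_gain * a, where true_gain is unknown to the agent.
"""
from collections import deque


def adaptation_module(history):
    """Estimate the hidden gain z from recent (x, a, x_next) transitions.

    Least squares for (x_next - x) = z * a gives z = sum(dx * a) / sum(a * a).
    Returns None while the window holds no informative (non-zero) actions.
    """
    num = sum((x_next - x) * a for x, a, x_next in history)
    den = sum(a * a for _, a, _ in history)
    return num / den if den > 1e-9 else None


def base_policy(x, target, z_hat, max_action=1.0):
    """Action that would close half the gap to `target` under the estimated gain."""
    gain = z_hat if z_hat is not None and abs(z_hat) > 1e-6 else 1.0  # prior guess
    a = 0.5 * (target - x) / gain
    return max(-max_action, min(max_action, a))  # actuator limit


def rollout(true_gain, target=1.0, steps=40, history_len=10):
    """Run the closed loop; return the final state and the final gain estimate."""
    x, z_hat = 0.0, None
    history = deque(maxlen=history_len)  # sliding window of recent transitions
    for _ in range(steps):
        a = base_policy(x, target, z_hat)
        x_next = x + true_gain * a  # the environment applies the unknown gain
        history.append((x, a, x_next))
        estimate = adaptation_module(history)  # re-estimate after every step
        if estimate is not None:  # keep the last estimate if the window is uninformative
            z_hat = estimate
        x = x_next
    return x, z_hat


if __name__ == "__main__":
    for g in (0.3, 2.0):
        print(g, rollout(g))
```

With `rollout(0.3)`, the policy starts from a wrong prior gain of 1.0, the estimate snaps to the true value after the first transition, and the state then converges to the target. The only point of the toy is that re-estimating a hidden factor online lets one fixed policy cope with environments it was not tuned for.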

Publication: Kumar, A., Fu, Z., Pathak, D., & Malik, J. RMA: Rapid motor adaptation for legged robots. RSS 2021.

Link: http://www.roboticsproceedings.org/rss17/p011.pdf

Project Page: https://ashish-kmr.github.io/rma-legged-robots/

Closing Report: bair_commons_update.pdf

Researchers

- Ashish Kumar, UC Berkeley
- Deepak Pathak, CMU
- Stuart Anderson, FAIR
- Jitendra Malik, UC Berkeley/FAIR

Overview

The goal of this work is to demonstrate robust locomotion of a quadruped robot on arbitrary terrain using proprioception and vision as on-board sensors. Existing work in the field is limited to regular terrain, such as flat surfaces or staircases [2,3,4,8,10,12]. The few works that do demonstrate locomotion in realistic settings do so by instrumenting the environment with sensors to fully map it. This approach incurs a setup cost for every new scene, which limits its applicability. We instead intend to use an onboard vision sensor to build a local terrain map of the robot's surroundings.
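One common representation for a local terrain map built from onboard sensing is a robot-centred 2.5D height map. The sketch below is a minimal, hypothetical version of that idea and not this project's pipeline: it assumes depth pixels have already been back-projected into 3D points in the robot frame, and the function name `local_height_map`, the cell size, the map extent, and the max-height-per-cell rule are all illustrative choices.

```python
"""Hypothetical sketch: bin onboard depth points into a robot-centred height map."""
import math


def local_height_map(points, cell_size=0.1, half_extent=1.0):
    """Build a square 2.5D height map centred on the robot.

    points: iterable of (x, y, z) in the robot frame, in metres (assumed to come
    from back-projecting depth pixels). Each cell stores the highest z that fell
    into it; cells that received no points stay None (unobserved).
    """
    n = int(round(2 * half_extent / cell_size))  # cells per side
    grid = [[None] * n for _ in range(n)]
    for x, y, z in points:
        i = math.floor((x + half_extent) / cell_size)
        j = math.floor((y + half_extent) / cell_size)
        if 0 <= i < n and 0 <= j < n:  # ignore points outside the local window
            if grid[i][j] is None or z > grid[i][j]:
                grid[i][j] = z
    return grid


if __name__ == "__main__":
    cloud = [(0.05, 0.05, 0.2), (0.06, 0.02, 0.3), (-0.95, -0.95, 0.0), (5.0, 0.0, 1.0)]
    hmap = local_height_map(cloud)
    print(len(hmap), hmap[10][10], hmap[0][0])
```

Keeping the maximum height per cell is a conservative choice for obstacles. A real system would also have to deal with sensor noise, occlusions, and fusing successive frames as the robot moves, all of which this sketch ignores.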

Moreover, works that use onboard sensors usually rely on a blind agent that cannot react to external uncertainties. To climb stairs, for example, such an agent must be told externally when to switch to its stair-walking gait. Some works that do use onboard sensors for locomotion primarily solve the foot placement problem [7], but they demonstrate performance under relatively controlled variations rather than in the wild. Onboard vision, by contrast, should allow the agent to generalize automatically to arbitrary terrain. Some works that do demonstrate locomotion on arbitrary terrain, such as [9], do so with a hexapod, which is a more stable but less agile system. Our focus is on quadruped robots on real terrain. We plan to use learning to solve this problem: in particular, we will train an agent in simulation and then transfer the learned agent to the real world.

Figure: (Left) Flat Terrain Sim without Depth, (Center) Uneven Terrain Sim with Depth, (Right) Real Robot - A1

First update of the project: We are using the A1 robot for our experiments. We have some preliminary results on uneven terrain using depth and proprioception, and we are now working on porting the policy to the real A1 robot.

Links

[1] Adolph, Karen E., et al. "Learning in the development of infant locomotion." Monographs of the Society for Research in Child Development (1997).

[2] Brooks, Rodney A. "A robot that walks; emergent behaviors from a carefully evolved network." Neural Computation 1.2 (1989): 253-262.

[3] Hwangbo, Jemin, et al. "Learning agile and dynamic motor skills for legged robots." arXiv preprint arXiv:1901.08652 (2019).

[4] Haarnoja, Tuomas, et al. "Learning to walk via deep reinforcement learning." arXiv preprint arXiv:1812.11103 (2018).

[5] Kretch, Kari S., and Karen E. Adolph. "Cliff or step? Posture-specific learning at the edge of a dropoff." Child development 84.1 (2013): 226-240.

[6] Karasik, Lana B., Catherine S. Tamis-LeMonda, and Karen E. Adolph. "Decisions at the brink: locomotor experience affects infants’ use of social information on an adjustable drop-off." Frontiers in Psychology 7 (2016): 797.

[7] Villarreal, Octavio, et al. "Fast and Continuous Foothold Adaptation for Dynamic Locomotion Through CNNs." IEEE Robotics and Automation Letters 4.2 (2019): 2140-2147.

[8] Clavera, Ignasi, et al. "Learning to adapt: Meta-learning for model-based control." arXiv preprint arXiv:1803.11347 3 (2018).

[9] Nagabandi, Anusha, et al. "Learning Image-Conditioned Dynamics Models for Control of Underactuated Legged Millirobots." 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018.

[10] Fankhauser, Péter, et al. "Robust rough-terrain locomotion with a quadrupedal robot." 2018 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2018.

[11] Matthis, Jonathan Samir, Jacob L. Yates, and Mary M. Hayhoe. "Gaze and the control of foot placement when walking in natural terrain." Current Biology 28.8 (2018).

[12] Lee, Joonho, et al. "Learning quadrupedal locomotion over challenging terrain." Science Robotics 5.47 (2020).